COVOPRIM – Comparing Voice Perception in Humans and Other Primates

COVOPRIM (Comparative Studies of Voice Perception in Primates) is a research project funded in 2018 by an Advanced Grant from the European Research Council.

Why study voices?

Voices from other individuals of the same species are among the most important sounds for primates—humans and non-humans alike. They carry rich information that helps us recognize individuals, understand emotions, and interact socially. Over millions of years, primates have developed specialized brain systems that allow them to process these sounds with remarkable precision.

What is COVOPRIM about?

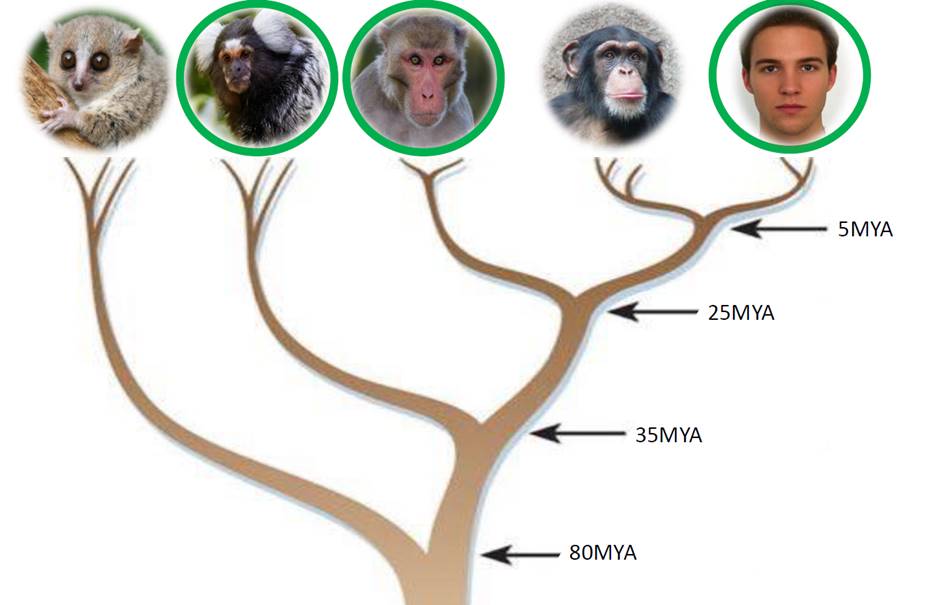

COVOPRIM explores how humans and other primates—baboons, macaques, and marmosets—perceive and process voices. By comparing species, we aim to reconstruct the recent evolutionary history of voice perception:

- Similarities in how voices are processed could mean that these brain mechanisms already existed in our common ancestor tens of millions of years ago.

- Differences could reveal how humans developed unique abilities such as speech and language in the last tens of thousands of years.

Why does this matter?

Understanding the roots of voice perception is key to:

- learning how speech and language emerged,

- improving treatments for communication disorders,

- and inspiring the next generation of brain-computer interfaces.

How do we study it?

We use the ‘comparative approach’, applying the same scientific methods to humans and other primates so that results can be compared directly:

- Behavioral experiments (WP1) – Monkeys take part in thousands of “voice games” on touchscreens, motivated by small food rewards. This lets us measure how they perceive and recognize voices while staying in their social groups.

- Brain imaging (WP2) – Both humans and monkeys undergo non-invasive brain scans (fMRI) using the same equipment and protocols, showing us which brain areas are active when hearing voices.

- Single-neuron studies (WP3) – In a few trained monkeys, we use advanced electrodes to record the activity of individual brain cells in voice-sensitive regions, to see how the brain analyzes voices in the finest detail.

How do we care for our monkeys?

The well-being of our animals is at the heart of COVOPRIM. All studies follow the strictest European and national ethical regulations, with no severe or terminal procedures. Our macaques and marmosets live in stable family or pair groups enriched with toys and activities, ensuring natural social life. Participation in experiments is entirely voluntary: touchscreens and testing modules are available inside the housing, and monkeys can choose to play the “voice games” at their own pace. Rewards are small treats added on top of their regular diet—never as a substitute. When advanced methods such as brain imaging or neuronal recordings are used, they are carried out under full anaesthesia, with reversible procedures that allow animals to return safely to their groups. In short, our goal is to combine cutting-edge science with the highest standards of care, respect, and enrichment for the primates who make this research possible.