PUBLICATIONS

2025

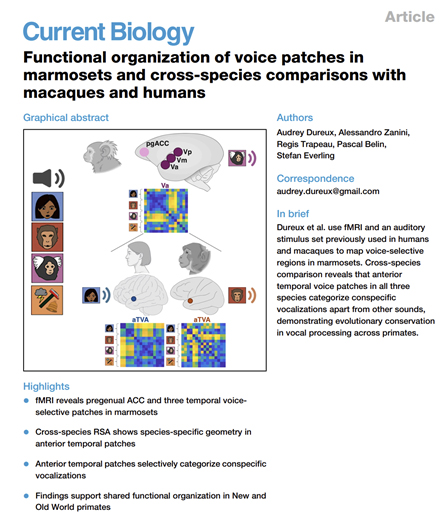

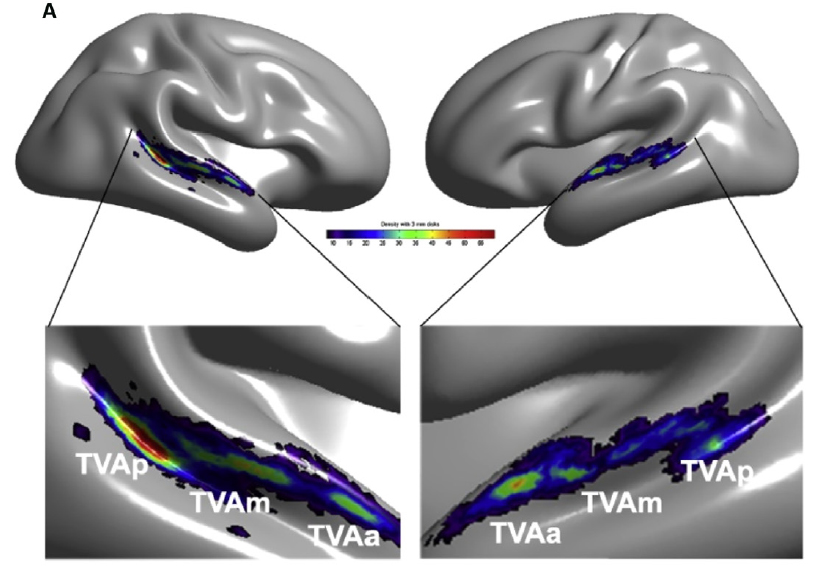

Functional organization of voice patches in marmosets and cross-species comparisons with macaques and humans

Current Biology

We recently identified voice-selective patches in the marmoset auditory cortex, but whether these regions specifically encode conspecific vocalizations over heterospecific ones—and whether they share a similar functional organization with those of humans and macaques—remains unknown. In this study, we used ultra-high-field functional magnetic resonance imaging (fMRI) in awake marmosets to characterize the cortical organization of vocalization processing and directly compare it with prior human and macaque data. Using an established auditory stimulus set designed for cross-species comparisons—including conspecific, heterospecific (macaque and human), and non-vocal sounds—we identified voice-selective patches showing preferential responses to conspecific calls. Robust responses were found in three temporal voice patches (anterior, middle, and posterior) and in the pregenual anterior cingulate cortex (pgACC), all showing significantly stronger responses to conspecific vocalizations than to other sound categories. A key finding was that, while the temporal patches also showed weak responses to heterospecific calls, the pgACC responded exclusively to conspecific vocalizations. Representational similarity analysis (RSA) revealed that dissimilarity patterns across these patches aligned exclusively with the marmoset-specific categorical model, indicating species-selective representational structure. Cross-species RSA comparisons revealed conserved representational geometry in the primary auditory cortex (A1) but species-specific organization in anterior temporal areas. These findings highlight shared principles of vocal communication processing across primates.
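As a rough illustration of the RSA logic described above, the sketch below builds a representational dissimilarity matrix (RDM) from response patterns and compares it with a categorical model RDM. Everything in it is an assumption made for illustration: the toy patterns, the three-category labels, the correlation-distance metric, and the Spearman comparison are not taken from the paper.

```python
import numpy as np

def average_ranks(x):
    """Rank values from 1..n, giving tied values their average rank."""
    _, inverse, counts = np.unique(x, return_inverse=True, return_counts=True)
    last_rank = np.cumsum(counts)                # highest rank in each tie group
    mean_rank = last_rank - (counts - 1) / 2.0   # average rank of each tie group
    return mean_rank[inverse]

def rdm(patterns):
    """Correlation-distance RDM: 1 - Pearson r between stimulus patterns (rows)."""
    return 1.0 - np.corrcoef(patterns)

def rsa_score(data_rdm, model_rdm):
    """Spearman correlation between the upper triangles of two RDMs."""
    iu = np.triu_indices_from(data_rdm, k=1)
    return np.corrcoef(average_ranks(data_rdm[iu]),
                       average_ranks(model_rdm[iu]))[0, 1]

# Hypothetical toy data: 6 stimuli from 3 sound categories, 6 voxels.
rng = np.random.default_rng(0)
labels = np.array([0, 0, 1, 1, 2, 2])
prototypes = 5.0 * np.eye(3, 6)                  # one response prototype per category
patterns = prototypes[labels] + rng.normal(scale=0.1, size=(6, 6))

# Categorical model: 0 within a category, 1 between categories.
model_rdm = (labels[:, None] != labels[None, :]).astype(float)
score = rsa_score(rdm(patterns), model_rdm)
print(f"RSA score (Spearman): {score:.3f}")
```

A high score means the measured dissimilarities follow the category structure of the model. Because a binary model contains many tied values, the score stays below 1 even when the categories are perfectly separated.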

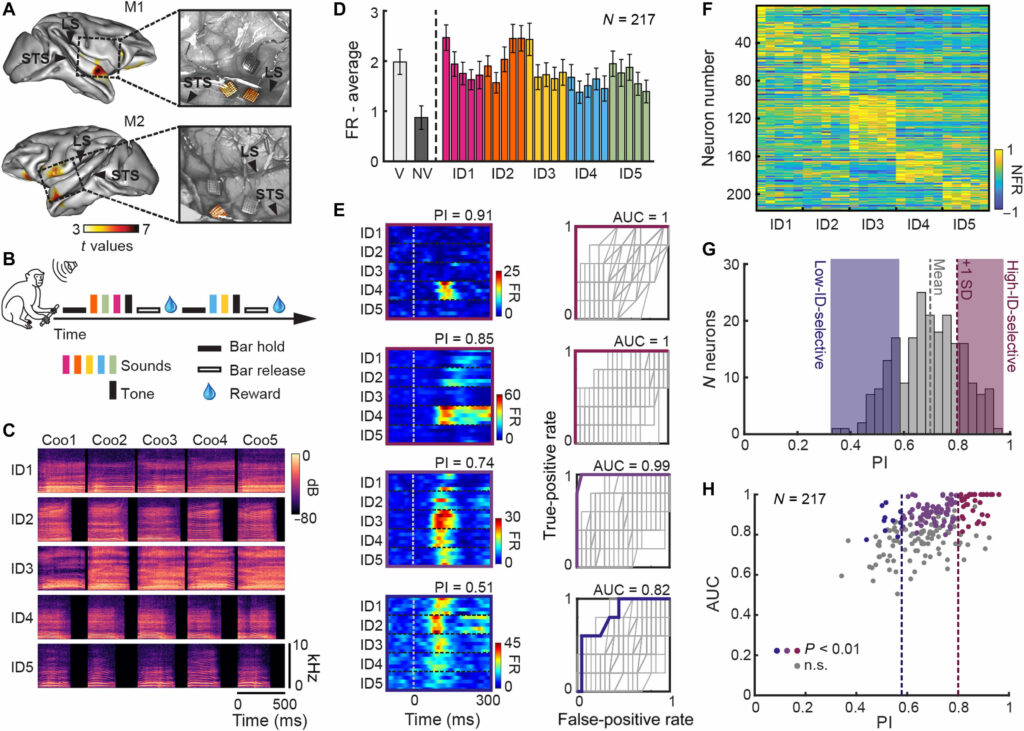

Voice identity invariance by anterior temporal lobe neurons

Science Advances

The ability to recognize speakers by their voice despite acoustical variation plays a substantial role in primate social interactions. Although neurons in the macaque anterior temporal lobe (ATL) show invariance to face viewpoint, whether they also encode abstract representations of caller identity is not known. Here, we demonstrate that neurons in the voice-selective ATL of two macaques support invariant voice identity representations through dynamic population-level coding. These representations minimize neural distances across different vocalizations from the same individual while preserving distinct trajectories for different callers. This structure emerged from the coordinated activity of the broader neuronal ensemble, although a small subset of highly identity-selective neurons carried high identity information. Our findings provide a neural basis for voice identity recognition in primates and highlight the ATL as a key hub for integrating perceptual voice features into higher-level identity representations.
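The idea of small neural distances within a caller and larger distances between callers can be sketched with a simple static measure. The snippet below is only an illustration under stated assumptions: the simulated population responses, the Euclidean metric, and the ratio statistic are hypothetical and do not reproduce the dynamic trajectory analysis of the study.

```python
import numpy as np

def identity_distance_ratio(responses, caller_ids):
    """Mean within-caller distance divided by mean between-caller distance.

    responses: (n_vocalizations, n_neurons) population response vectors.
    caller_ids: (n_vocalizations,) caller label for each vocalization.
    """
    responses = np.asarray(responses, dtype=float)
    caller_ids = np.asarray(caller_ids)
    diffs = responses[:, None, :] - responses[None, :, :]
    dist = np.linalg.norm(diffs, axis=-1)        # pairwise Euclidean distances
    iu = np.triu_indices(len(caller_ids), k=1)   # each pair counted once
    same = (caller_ids[:, None] == caller_ids[None, :])[iu]
    d = dist[iu]
    return d[same].mean() / d[~same].mean()

# Hypothetical toy population: 3 callers, 4 vocalizations each, 20 neurons.
rng = np.random.default_rng(0)
caller_ids = np.repeat(np.arange(3), 4)
caller_prototypes = 2.0 * rng.normal(size=(3, 20))
responses = caller_prototypes[caller_ids] + rng.normal(scale=0.3, size=(12, 20))

ratio = identity_distance_ratio(responses, caller_ids)
print(f"within/between distance ratio: {ratio:.2f}")
```

A ratio well below 1 indicates that vocalizations from the same caller sit closer together in population space than vocalizations from different callers.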

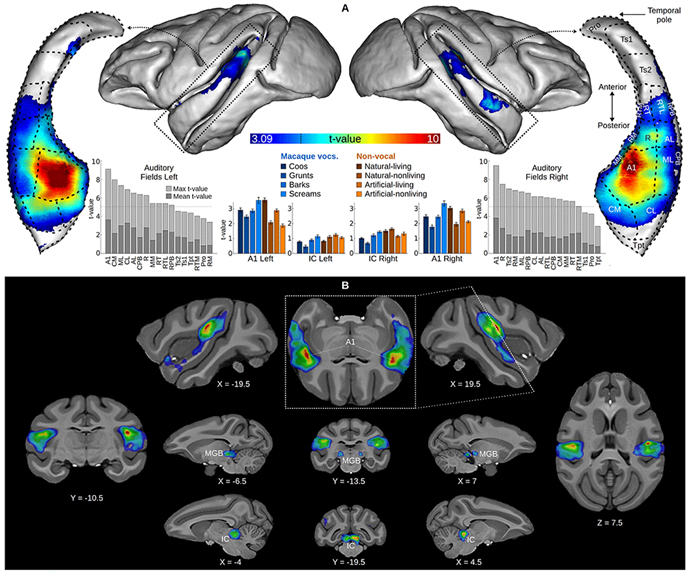

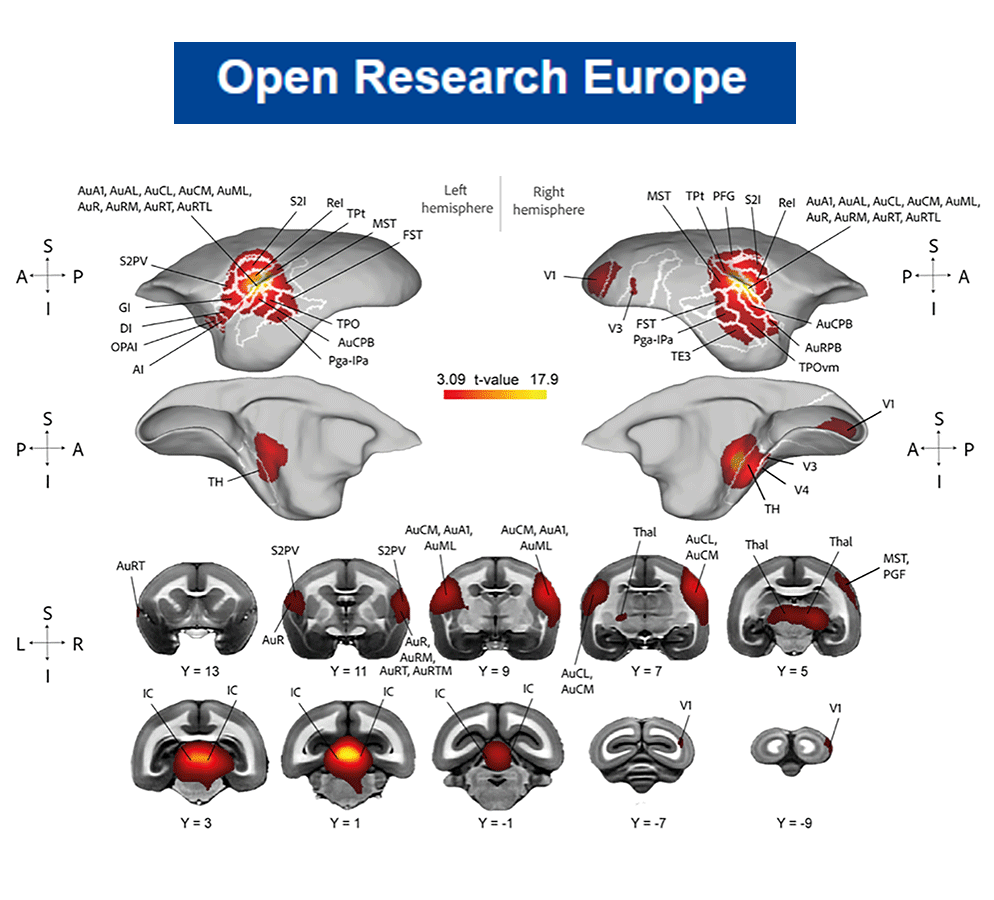

Functional mapping of auditory responses to complex sounds under light anesthesia in rhesus macaques

Open Research Europe

Eleven animals were scanned while listening to macaque vocalizations and non-vocal sounds. Robust activation was observed in primary and belt auditory cortices as well as subcortical structures, indicating preserved auditory responsiveness under anesthesia.

A large annotated dataset of vocalizations by common marmosets

Scientific Data

Non-human primates, our closest relatives, use a wide range of complex vocal signals for communication within their species. Previous research on marmoset (Callithrix jacchus) vocalizations has been limited by sampling rates that do not cover the whole hearing range and by labeling insufficient for advanced analyses using Deep Neural Networks (DNNs). Here, we provide a database of common marmoset vocalizations, continuously recorded at a sampling rate of 96 kHz in an animal holding facility that simultaneously housed ~20 marmosets in three cages. The dataset comprises more than 800,000 files, amounting to 253 hours of data collected over 40 months.
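The figures quoted above allow a quick back-of-the-envelope check. The sketch below assumes that the 253 hours refer to the summed duration of the files and treats 800,000 as a lower bound on the file count, so the resulting mean duration is an upper bound rather than a reported value.

```python
sampling_rate_hz = 96_000   # from the dataset description
total_hours = 253           # from the dataset description
n_files = 800_000           # lower bound ("more than 800,000 files")

# Highest frequency representable at this sampling rate (Nyquist limit).
nyquist_khz = sampling_rate_hz / 2 / 1000

# Upper bound on the mean file duration, assuming 253 h is the summed duration.
total_seconds = total_hours * 3600
max_mean_file_s = total_seconds / n_files

print(f"Nyquist frequency: {nyquist_khz:.0f} kHz")
print(f"Mean file duration: at most {max_mean_file_s:.2f} s")
```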

Investigating the cortical mechanisms of conspecific voice perception in anesthetized marmosets using 3T fMRI

Open Research Europe

Fifteen adult marmosets were scanned with 3T fMRI under sevoflurane anesthesia, using a 16-channel marmoset head coil and a dedicated ‘clustered-sparse’ sampling design, during auditory stimulation with conspecific vocalizations and scrambled control stimuli.

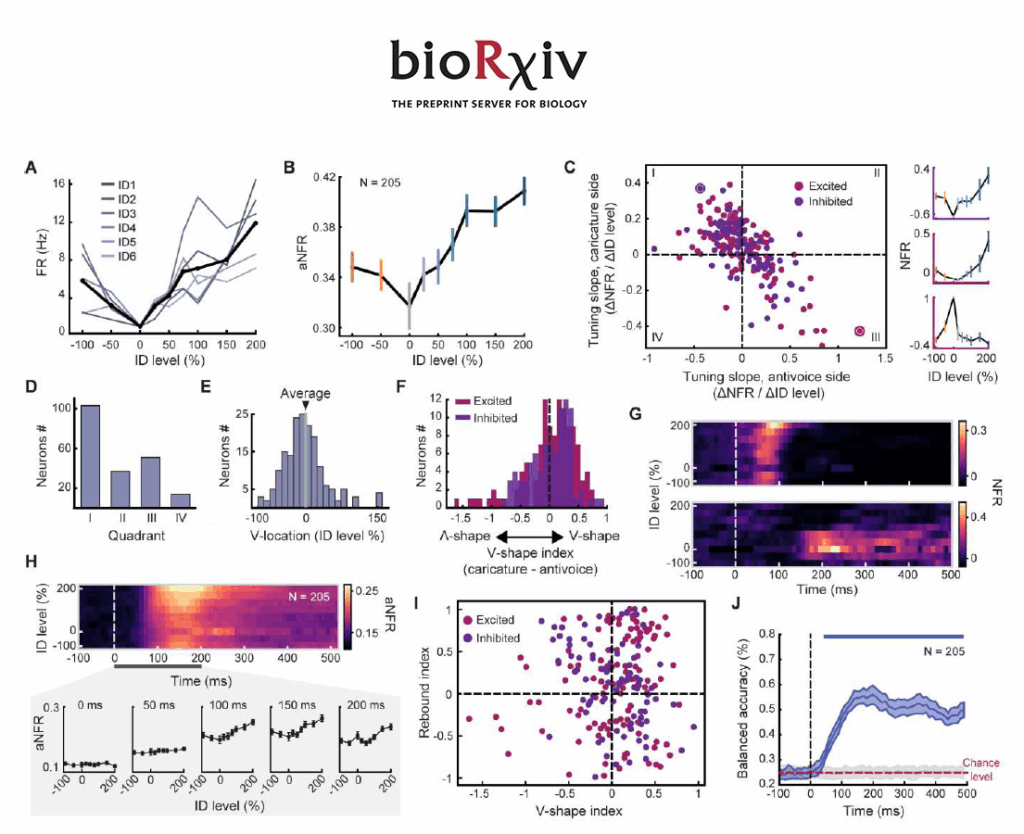

Dual temporal codes for voice identity in the primate auditory cortex

Preprint

We investigated whether neurons in the anterior temporal voice area (aTVA) of macaques use norm-based coding (NBC), similar to face encoding in vision. Using synthetic vocalizations morphed along identity trajectories, we found that early neuronal responses (100 to 150 ms) exhibited V-shaped tuning, with minimal activity for the average voice and increased responses for extreme identities. Later (200 ms), a distinct rebound in activity for the average voice emerged, driven by a unique subpopulation

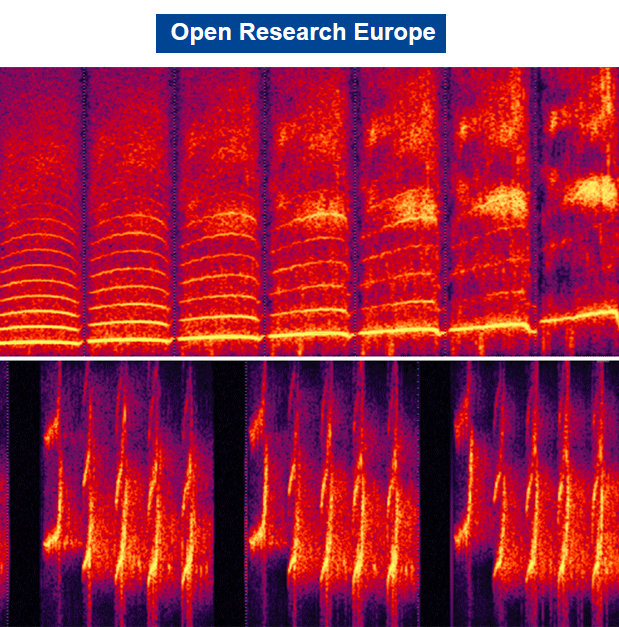

STRAIGHTMORPH: A Voice Morphing Tool for Research in Voice Communication Sciences

Open Research Europe

The purpose of this paper is to make an efficient voice morphing tool called STRAIGHTMORPH easily available to the scientific community and to provide a short tutorial on its use, with examples. STRAIGHTMORPH consists of a set of Matlab functions allowing the generation of high-quality, parametrically controlled morphs of an arbitrary number of voice samples. The first step consists of extracting an ‘mObject’ for each voice sample, with accurate tracking of the fundamental frequency contour and manual definition of time and frequency anchors that correspond across the samples to be morphed. The second step consists of parametrically combining the mObjects to generate novel synthetic stimuli, such as gender, identity or emotion continua, or random combinations. STRAIGHTMORPH constitutes a simple but efficient and versatile tool to generate high-quality, parametrically controlled continua between voices – and beyond.
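The notion of parametrically combining voice samples can be illustrated with a generic weighted average. The sketch below is written in Python and is not STRAIGHTMORPH code: the function names, the toy parameter vectors, and the plain linear weighting are all assumptions chosen for illustration, and the actual tool operates on mObjects through its own Matlab functions.

```python
import numpy as np

def morph_weights(n_steps):
    """Weights (w_a, w_b) for a two-voice continuum from 100% A to 100% B."""
    w_b = np.linspace(0.0, 1.0, n_steps)
    return np.column_stack([1.0 - w_b, w_b])

def combine(params, weights):
    """Weighted sum of per-voice parameter vectors.

    params: (n_voices, n_features) parameters aligned across voices.
    weights: (n_steps, n_voices) morphing weights, each row summing to 1.
    """
    params = np.asarray(params, dtype=float)
    weights = np.asarray(weights, dtype=float)
    return weights @ params

# Hypothetical aligned parameters, e.g. a value sampled at three shared anchors.
voice_a = [100.0, 110.0, 120.0]
voice_b = [200.0, 210.0, 220.0]

continuum = combine([voice_a, voice_b], morph_weights(5))
print(continuum)
```

Each row of `continuum` is one step of the morph, and the same `combine` function accepts more than two voices as long as every weight row has one entry per voice.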

The cerebral architecture of voice information processing

Encyclopedia of the Human Brain

• Voices are information-rich “auditory faces” that the human brain is expert at processing

• The three main types of voice information (speech, identity, affect) are processed in interacting but dissociable functional pathways (as for faces)

• The Temporal Voice Areas (TVAs) constitute crucial nodes in the network of cortical regions processing voice information

• The TVAs categorize conspecific vocalizations apart from other sounds

• Evidence of TVAs in the rhesus macaque suggests a long evolutionary history of the vocal brain.

2024

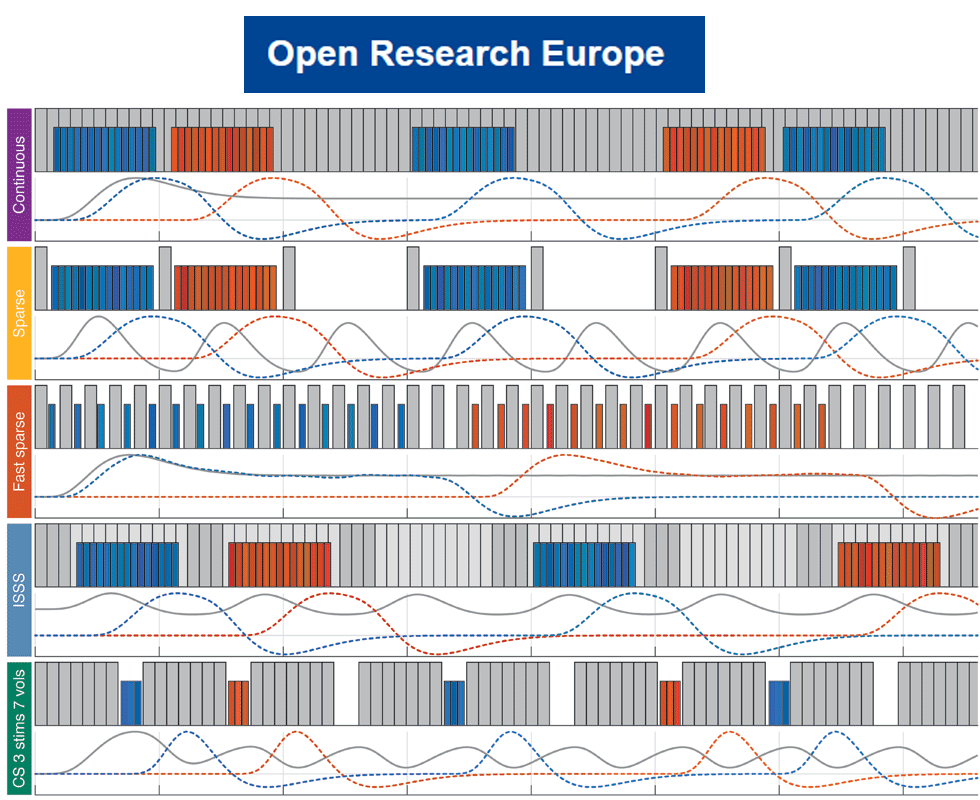

Comparison of auditory fMRI protocols for a voice localizer

Open Research Europe

This study systematically evaluates five different fMRI protocols (continuous, sparse, fast sparse, clustered sparse, and interleaved silent steady state [ISSS]) to determine their effectiveness in capturing auditory and voice-related brain activity under identical scanning conditions. Continuous imaging produced the most extensive and strongest auditory activation, followed closely by clustered sparse sampling. Both sparse and fast sparse sampling yielded intermediate results, with fast sparse sampling performing better at detecting voice-specific activation. ISSS had the lowest activation sensitivity. The results highlight that continuous imaging is optimal when participants are well protected from scanner noise, while clustered sparse sequences offer the best alternative when stimuli are to be presented in silence.

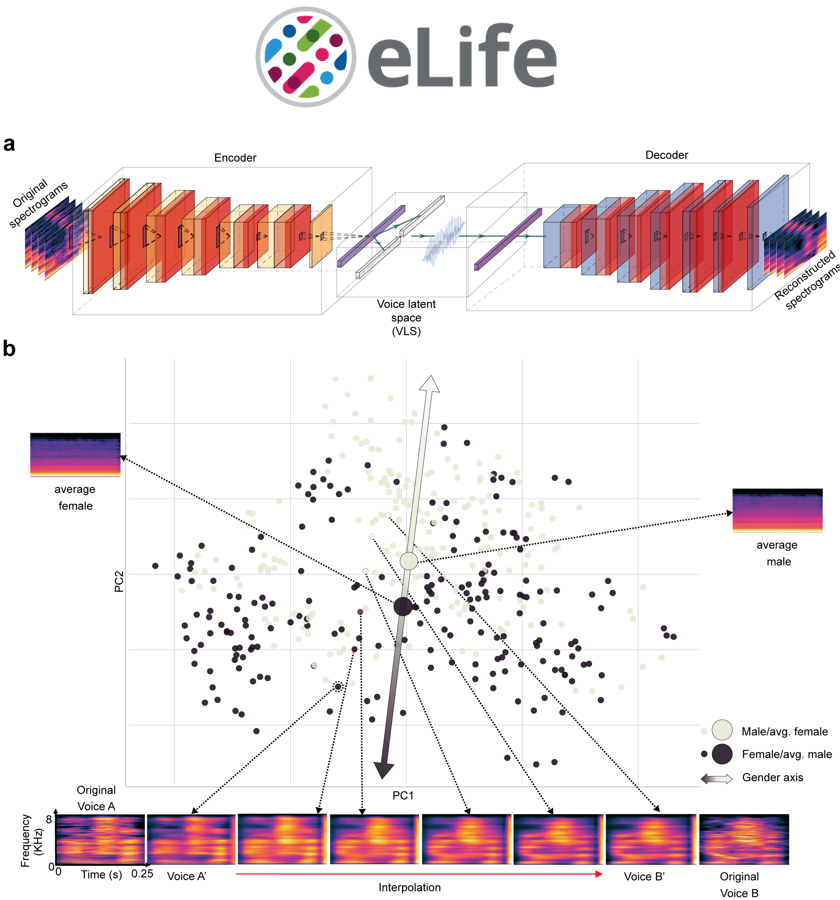

Reconstructing Voice Identity from Noninvasive Auditory Cortex Recordings

eLife preprint

The cerebral processing of voice information is known to engage, in human as well as non-human primates, “temporal voice areas” (TVAs) that respond preferentially to conspecific vocalizations. However, how voice information is represented by neuronal populations in these areas, particularly speaker identity information, remains poorly understood. Here, we used a deep neural network (DNN) to generate a high-level, low-dimensional representational space for voice identity, the ‘voice latent space’ (VLS), and examined its linear relation with cerebral activity via encoding, representational similarity, and decoding analyses. We find that the VLS maps onto fMRI measures of cerebral activity in response to tens of thousands of voice stimuli from hundreds of different speaker identities and better accounts for the representational geometry for speaker identity in the TVAs than in A1. Moreover, the VLS allowed TVA-based reconstructions of voice stimuli that preserved essential aspects of speaker identity as assessed by both machine classifiers and human listeners. These results indicate that the DNN-derived VLS provides high-level representations of voice identity information in the TVAs.
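A linear encoding analysis of the kind mentioned above can be sketched as a regularized regression from latent features to measured responses. The snippet below is a minimal illustration under stated assumptions: ridge regression, the array sizes, and the simulated noise-free data are hypothetical choices and are not taken from the study.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)

# Hypothetical toy setup: 200 stimuli, 8 latent dimensions, 5 voxels.
rng = np.random.default_rng(0)
latents = rng.normal(size=(200, 8))        # stand-in for latent-space coordinates
true_weights = rng.normal(size=(8, 5))     # simulated latent-to-voxel mapping
responses = latents @ true_weights         # noise-free simulated responses

weights = fit_ridge(latents, responses, alpha=1e-6)
predicted = latents @ weights

error = np.abs(predicted - responses).max()
print(f"max absolute prediction error: {error:.2e}")
```

With real data the penalty `alpha` would normally be chosen by cross-validation, and model quality would be assessed on held-out stimuli rather than on the training set.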

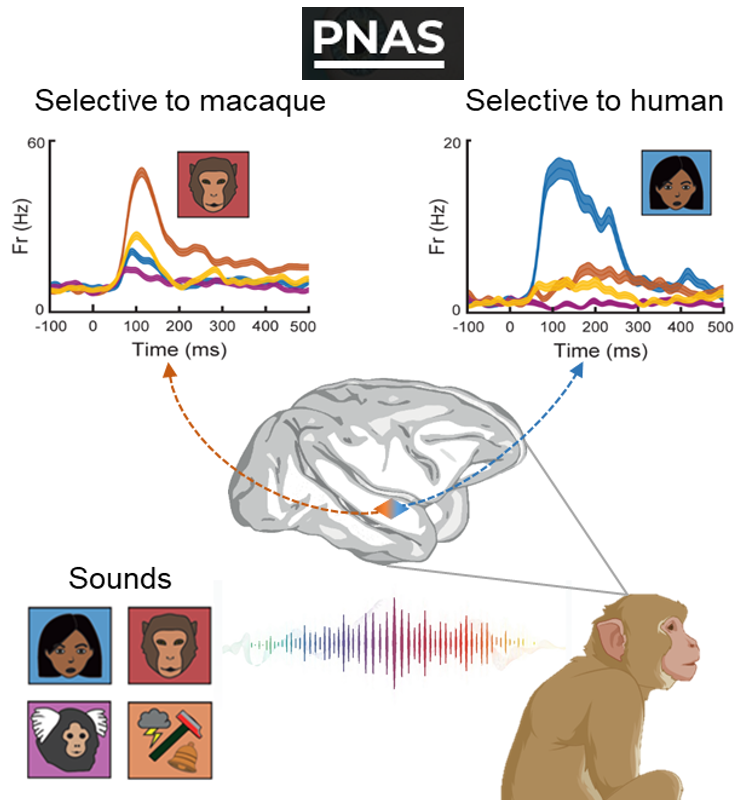

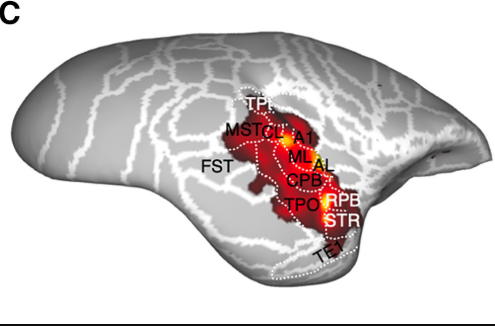

A population of neurons selective for human voice in the monkey brain

Proceedings of the National Academy of Sciences

Many animals can extract useful information from the vocalizations of other species. Neuroimaging studies have evidenced areas sensitive to conspecific vocalizations in the cerebral cortex of primates, but how these areas process heterospecific vocalizations remains unclear. Using fMRI-guided electrophysiology, we recorded the spiking activity of individual neurons in the anterior temporal voice patches of two macaques while they listened to complex sounds including vocalizations from several species. In addition to cells selective for conspecific macaque vocalizations, we identified an unsuspected subpopulation of neurons with strong selectivity for human voice, not merely explained by spectral or temporal structure of the sounds. The auditory representational geometry implemented by these neurons was strongly related to that measured in the human voice areas with neuroimaging and only weakly to low-level acoustical structure. These findings provide new insights into the neural mechanisms involved in auditory expertise and the evolution of communication systems in primates.

2023

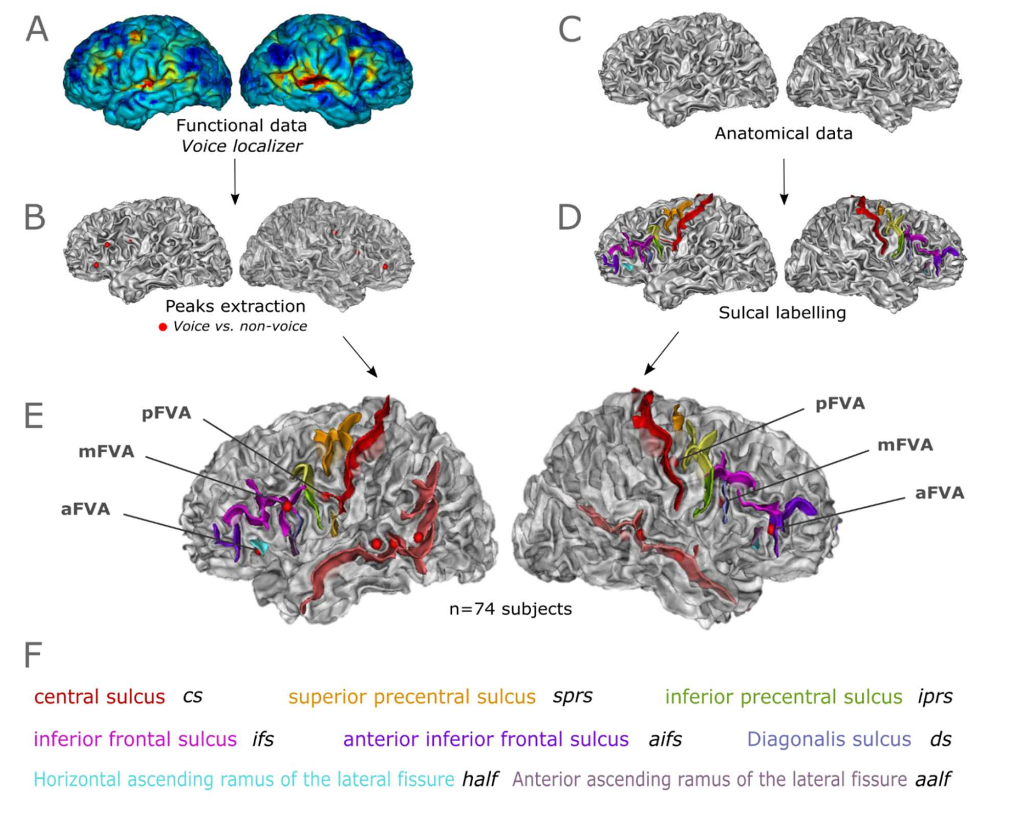

Anatomo-functional correspondence in the voice-selective regions of human prefrontal cortex

Neuroimage

Group-level analyses of functional regions involved in voice perception show evidence of three sets of bilateral voice-sensitive activations in the human prefrontal cortex, named the anterior, middle and posterior Frontal Voice Areas (FVAs). However, their relationship with the underlying sulcal anatomy, which is highly variable in this region, is still unknown. We examined the inter-individual variability of the FVAs in conjunction with the sulcal anatomy. To do so, anatomical and functional MRI scans from 74 subjects were analyzed to generate individual contrast maps of the FVAs and relate them to each subject’s manually labeled prefrontal sulci. We report two major results. First, the frontal activations for the voice are significantly associated with the sulcal anatomy. Second, this correspondence with the sulcal anatomy at the individual level is a better predictor than coordinates in the MNI space. These findings offer new perspectives for the understanding of anatomical-functional correspondences in this complex cortical region. They also shed light on the importance of considering individual-specific variations in each subject’s anatomy.

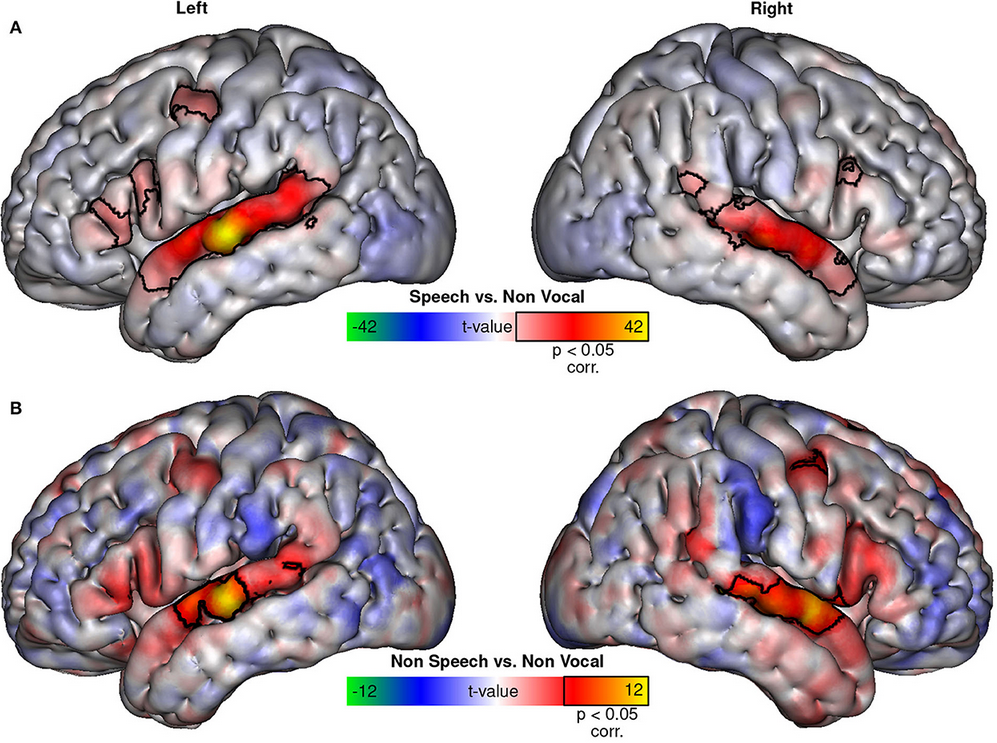

The Temporal Voice Areas are not “just” Speech Areas

Frontiers in Neuroscience

The Temporal Voice Areas (TVAs) respond more strongly to speech sounds than to non-speech vocal sounds, but does this make them Temporal “Speech” Areas? We provide a perspective on this issue by combining univariate, multivariate, and representational similarity analyses of fMRI activations to a balanced set of speech and non-speech vocal sounds. We find that while speech sounds activate the TVAs more than non-speech vocal sounds, which is likely related to their larger temporal modulations in syllabic rate, they do not appear to activate additional areas nor are they segregated from the non-speech vocal sounds when their higher activation is controlled. It seems safe, then, to continue calling these regions the Temporal Voice Areas.

A small, but vocal, brain

Cell Reports

In the May issue of Cell Reports, Jafari et al.¹ used ultra-high-field fMRI to show that marmosets, like humans and macaques, possess an extensive network of voice-selective areas